Configuring Agent Guidance to work with ChatGPT

Author: Geouffrey Erasmus | Date: 27/07/2023

OpenAI’s large language model (LLM), ChatGPT, has made headlines all around the world due to its natural language capabilities, ease of use, and accessibility.

People are using ChatGPT to write songs or poems, create recipes, and help with their work, such as writing or fixing code.

The potential of LLMs to support our lives can’t be overstated.

But what implications will ChatGPT and other LLMs have for the contact centre?

Awaken CTO Geouffrey Erasmus spoke to our Head of Innovation, Andrew Hemingway, who has been looking closely at how LLMs can influence agent guidance in the contact centre. In particular, they discussed how tools like ChatGPT can help agents provide a more efficient and satisfying experience for customers.

[GE] Hi Andy, let’s start with the basics: what is Intelligent Agent?

Hi Geouffrey, Intelligent Agent is a dynamic scripting and workflow software application for the contact centre. It guides agents by surfacing relevant information at the right time and provides them with the ‘next-best-action’ to achieve the correct outcome for the customer.

It’s a low-code tool, which means minimal support is required from IT to manage it, which is great for operational teams. It uses a single, universal agent desktop, which helps make the process simpler for agents. In fact, we see training time for new agents drop by as much as 60% when using the tool. It’s also deeply integrated with a number of leading telephony and other contact centre technologies, which is how it retrieves the right information so quickly and efficiently.

[GE] Thanks, Andy. High level, how powerful could a collaboration like the one between Intelligent Agent and ChatGPT be?

Very, is the short answer.

Because of the way ChatGPT works, the ability to ask it questions as a human would and get human-like responses is incredibly powerful.

Suddenly you have the opportunity for agents and operational teams to ask questions or perform ‘actions’ that would previously have required a technical solution.

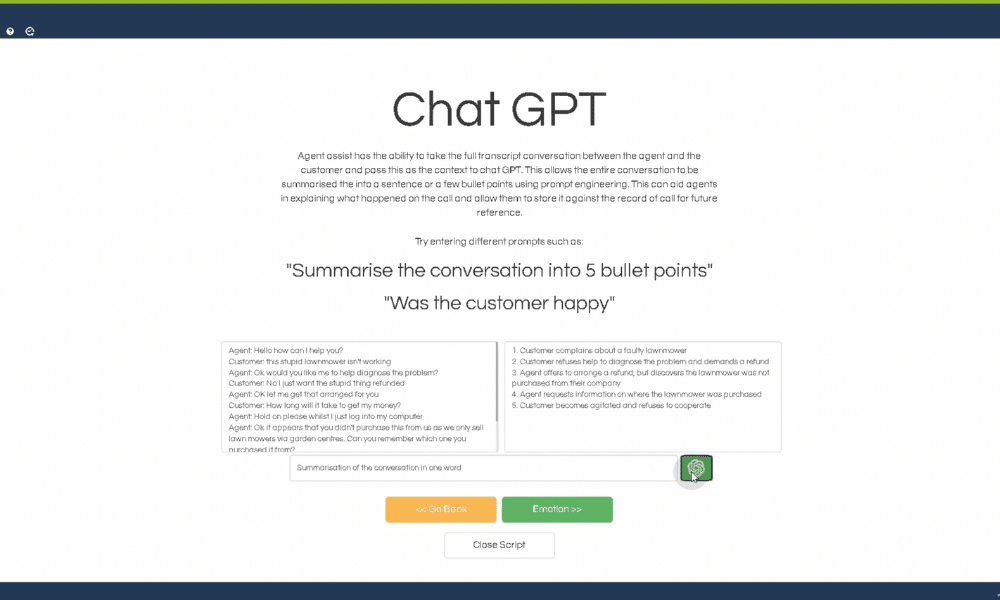

An example would be call summarisation. We can now ask ChatGPT to ingest a call or interaction transcript and, say, summarise it in five bullet points. Imagine the time saving for the agent or QA, as opposed to reading the whole transcript as they would have in the past!

Fig. 1 – ChatGPT has been asked to summarise a call transcript into five summary bullet points

[GE] What other use cases are there for ChatGPT?

Call summarisation is a really obvious one. An extension of that is how agents interact with Knowledge-Based Articles (KBAs) and other information repositories. Rather than searching endlessly through their own support materials, they can ask ChatGPT to retrieve just the right information and pass that to the customer.

Another use case is in quality management and how the QA team reviews agent performance. ChatGPT can be used to automate the population of the agent scorecard: ‘Did the agent offer a greeting?’ Yes or no. ‘Did the agent read the compliance statement correctly?’ Yes or no.

Again, this is a very significant time saving, and it enables the QA team to review and score 100% of interactions in an automated fashion.
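To make the scorecard idea concrete, here is a minimal Python sketch. It is an illustration only, not Awaken's implementation: the function names, the prompt wording, and the `ask_llm` callable (a stand-in for whatever sends a prompt to the model and returns its text reply) are all assumptions. It builds one prompt from a list of yes/no questions and turns the model's reply back into a scorecard, leaving any unanswered question as `None` for a human reviewer.

```python
from typing import Callable, Dict, List, Optional

# Example scorecard questions taken from the interview above.
QUESTIONS: List[str] = [
    "Did the agent offer a greeting?",
    "Did the agent read the compliance statement correctly?",
]


def build_scorecard_prompt(transcript: str, questions: List[str]) -> str:
    """Combine the questions and the transcript into a single prompt (hypothetical wording)."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        "Answer each question about the call transcript with Yes or No only, "
        "one answer per line, in the form '<number>. <Yes|No>'.\n\n"
        f"Questions:\n{numbered}\n\nTranscript:\n{transcript}"
    )


def parse_scorecard(reply: str, questions: List[str]) -> Dict[str, Optional[bool]]:
    """Map each question to True/False; None means the reply did not answer it."""
    answers: Dict[str, Optional[bool]] = {q: None for q in questions}
    for line in reply.splitlines():
        number, sep, answer = line.strip().partition(".")
        if not sep or not number.strip().isdigit():
            continue  # skip lines that are not in '<number>. <answer>' form
        index = int(number) - 1
        verdict = answer.strip().rstrip(".").lower()
        if 0 <= index < len(questions) and verdict in ("yes", "no"):
            answers[questions[index]] = verdict == "yes"
    return answers


def score_call(
    transcript: str,
    ask_llm: Callable[[str], str],  # assumed: sends a prompt, returns the model's text
    questions: List[str] = QUESTIONS,
) -> Dict[str, Optional[bool]]:
    """Ask the model to fill in the scorecard for one transcript."""
    reply = ask_llm(build_scorecard_prompt(transcript, questions))
    return parse_scorecard(reply, questions)
```

Keeping the parser strict means a vague or malformed reply is flagged for manual review instead of being scored as a pass or a fail.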

[GE] How do we configure ChatGPT to work with Intelligent Agent?

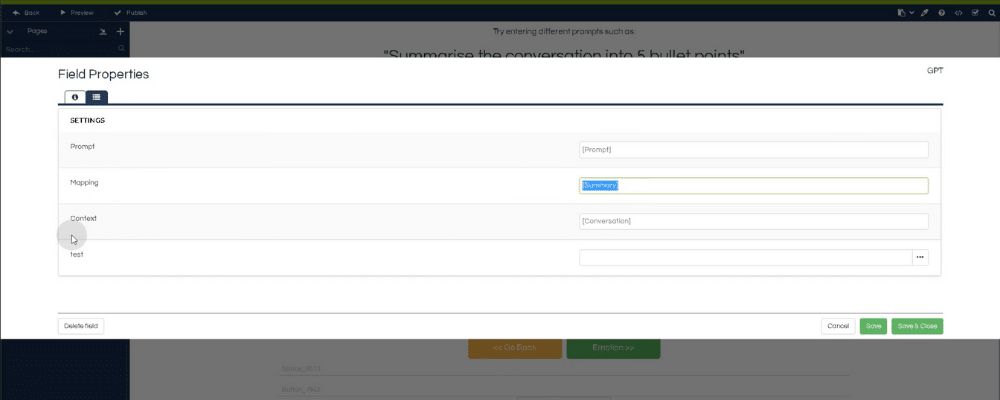

We’ve configured ChatGPT to work off a series of prompts, which can be hidden in buttons or options that the agent can invoke.

For example, a button labelled ‘Call Summary’ automatically sends the transcript to ChatGPT for summarisation when clicked.

We then set a series of attributes that can be customised to drive the control prompt.

You can find more information on Integrating ChatGPT in this document.

Fig. 2 – We configure ChatGPT as a series of prompts, controlled by customisable attributes
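The prompt-behind-a-button approach described above can be sketched roughly as follows. This is a hedged illustration in Python, not the product's actual configuration format: the `PromptAction` class, the `bullet_count` and `tone` attribute names, and the `send` callable are hypothetical and chosen only to show how customisable attributes could drive a hidden prompt.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Callable, Dict


@dataclass
class PromptAction:
    """A button the agent can click; the prompt itself stays hidden (hypothetical model)."""
    label: str
    template: Template
    attributes: Dict[str, str] = field(default_factory=dict)

    def render(self, transcript: str, **overrides: str) -> str:
        """Fill the template with default attributes, any overrides, and the transcript."""
        values = {**self.attributes, **overrides, "transcript": transcript}
        return self.template.substitute(values)


# Assumed attribute names; an operational team could change the values
# without touching the prompt text or any code.
CALL_SUMMARY = PromptAction(
    label="Call Summary",
    template=Template(
        "Summarise the following call transcript in $bullet_count bullet points. "
        "Write in a $tone tone.\n\n$transcript"
    ),
    attributes={"bullet_count": "five", "tone": "neutral"},
)


def on_button_click(action: PromptAction, transcript: str, send: Callable[[str], object]):
    """When the agent clicks the button, render the prompt and pass it to `send`."""
    return send(action.render(transcript))
```

For instance, `CALL_SUMMARY.render(transcript, bullet_count="three")` would produce a shorter summary request while the agent still sees only the ‘Call Summary’ button.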

[GE] What security considerations should people be thinking about?

There are a number of considerations, and I’m sure many people have heard in the news about some of the issues organisations have faced by feeding their own IP or customer information into these public language models.

Private hosting is obviously a necessity for many businesses but we also believe redaction will be just as important.

Having the ability to remove personally identifiable information (PII) will be especially crucial to the successful implementation of applications such as ChatGPT.
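As a rough illustration of redaction, the Python sketch below replaces a few common kinds of PII with placeholders before a transcript is sent anywhere. The patterns and placeholder names are assumptions made for this example; they are deliberately simple, will miss names and addresses, and may over-redact other long digit sequences, so a production deployment would need a dedicated redaction service.

```python
import re
from typing import List, Pattern, Tuple

# Assumed, deliberately simple patterns. Order matters: card numbers are
# checked before phone numbers so a 16-digit card is not labelled as a phone.
PII_PATTERNS: List[Tuple[str, Pattern[str]]] = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){13,16}\b")),
    ("PHONE", re.compile(r"\+?\d[\d ()-]{8,}\d")),
]


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL] before the text leaves the business."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text
```

Running `redact` on a transcript before it is added to a prompt means the language model only ever sees the placeholders, not the customer's details.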

[GE] Thanks Andy, anything else to add?

This space is moving incredibly quickly. New use cases are being thought up all the time, and there is potential to embed LLMs deeply within the contact centre operation. Security is definitely front of mind right now, and rightly so, but the market is working just as hard on that side of the fence as well.

For more information on agent guidance technology or how LLMs can support your contact centre, get in touch today.